About the Project

If you have worked on projects that involved manipulating large matrices of data that could have been processed in parallel (Machine Learning, QEA, etc.), you probably remember how long they took to run. Maybe they even crashed under the weight of your expectations.

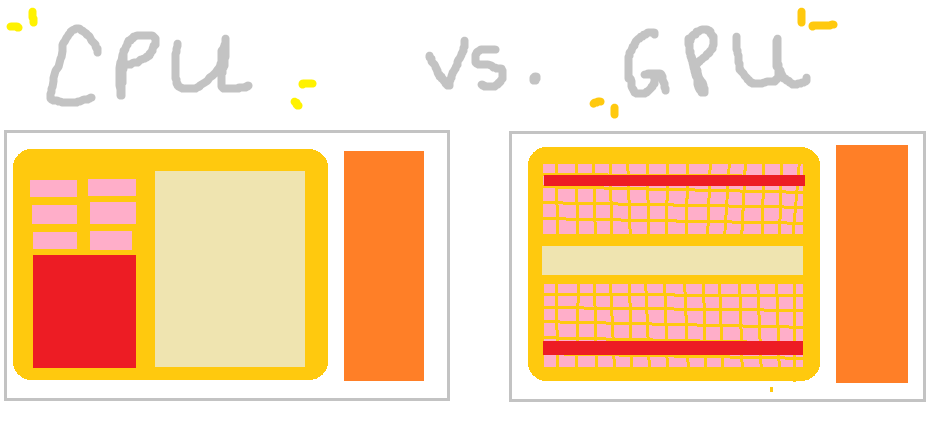

Understanding the performance differences between a Central Processing Unit (CPU) and a Graphics Processing Unit (GPU) helps tremendously, not only with graphics processing, but also with fluid dynamics, cryptography, neural networks, genome mapping, computational finance, and really any two- or three-dimensional analysis that can be run in parallel. Knowing how offloading certain tasks to the GPU affects performance is key to reducing the time and power it takes to run these computations. This approach is called General-Purpose computing on Graphics Processing Units (GPGPU), and it refers to running tasks on the GPU that were historically handled by the CPU.

For our project, our team focused on analyzing the performance of two different Machine Learning algorithms that were designed to run on both the CPU and the GPU. To perform these computations, our team used CUDA, a proprietary parallel computing platform developed by Nvidia that provides hardware and software support for general-purpose computing on Nvidia GPUs. Each algorithm was run several times on the CPU alone and on the CPU and GPU together, with sample datasets of varying size. We also monitored other variables: GPU core temperature, CPU core temperature, power draw, memory usage, and CPU/GPU usage.
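The repeated-run comparison described above can be sketched as a small timing harness. This is a minimal illustration under stated assumptions, not our actual scripts: `time_runs`, `benchmark`, and `cpu_workload` are hypothetical names, and the pure-Python matrix multiply is only a stand-in for a real Machine Learning workload. A GPU-backed function would be registered alongside the CPU one in the same dictionary.

```python
import statistics
import time


def time_runs(workload, size, repeats=5):
    """Return wall-clock durations (seconds) for `repeats` runs of workload(size)."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload(size)
        durations.append(time.perf_counter() - start)
    return durations


def cpu_workload(size):
    """Hypothetical stand-in for a CPU-only run: multiply two size x size matrices."""
    a = [[float(i + j) for j in range(size)] for i in range(size)]
    b = [[float(i - j) for j in range(size)] for i in range(size)]
    # zip(*b) iterates over the columns of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def benchmark(workloads, sizes, repeats=5):
    """Map each (backend name, size) to the median duration over `repeats` runs."""
    results = {}
    for name, workload in workloads.items():
        for size in sizes:
            results[(name, size)] = statistics.median(time_runs(workload, size, repeats))
    return results


if __name__ == "__main__":
    # Only a CPU backend is registered here; a GPU-backed function would be
    # added to this dict under its own key (an assumption, not the project's code).
    results = benchmark({"cpu": cpu_workload}, sizes=[16, 32, 64], repeats=3)
    for (name, size), seconds in sorted(results.items()):
        print(f"{name:>4} n={size:<4} median={seconds:.6f}s")
```

Reporting the median over several runs, rather than a single measurement, reduces the influence of one-off delays such as warm-up or background processes.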

Our findings explain the architectural differences between the CPU and GPU, our complete methodology, the hardware and software support of CUDA, and possible explanations for the runtime differences of both scripts on the CPU alone versus the CPU and GPU together. We chose to package the most valuable information from our learning into a Zine. You can view it here or by clicking the Zine tab at the top of the page.

Most of our detailed documentation is written up on Notion. You can view it here or by clicking on the Documentation tab.

If you are interested in contributing to this project, you can learn more about how to build on it by clicking here or on the Contributing tab above.